Know Your Brain

Modern science has revealed some extremely valuable, albeit somewhat upsetting, truths about the human brain. Many, many experiments have shown that our brains work far less rationally than we think they do.

For example, a famous experiment showed that, under the right circumstances, someone can dress up in a gorilla costume, walk right into your field of vision, jump up and down and beat their chest, and there is a good chance that you won't even see them. All it takes is the right kind of mental distraction. (This has important ramifications; it means that nobody can ever be certain that they wouldn't forget their baby in the car, and it is incumbent on all young parents to implement some sort of automated checking system.)

It has also been shown repeatedly that you can be 100% certain that you saw something that you couldn't possibly have seen. Millions of people are certain that they saw Tom Cruise jump up and down on Oprah's couch, declaring his love for Katie Holmes. But it never happened. Similarly, when people were surveyed about where they were when they heard about 9/11, it turned out that many of them misremembered completely, yet with full certainty.

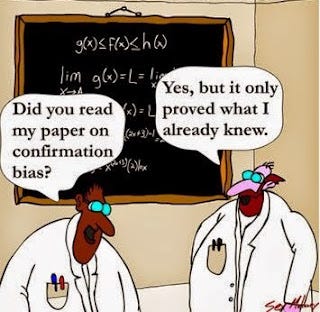

There are many other ways in which our minds do not work as well as we believe them to. Several have been discussed in two fascinating books, The Invisible Gorilla and Predictably Irrational. One of the most pernicious of these inbuilt human mental flaws is Confirmation Bias. This refers to how we search for, interpret, favor, and recall information in a way that confirms or supports our preexisting beliefs.

In one experiment at Stanford, students were divided into two groups according to whether they believed that capital punishment does or does not discourage crime. Each group was given two sets of scientific papers: one set presented evidence that capital punishment does discourage crime, and the other presented evidence that it has no such effect. (Unbeknownst to the students, both sets of papers were fabricated for the purpose of the experiment, and were equal in the strength of the "evidence" that they offered.) When questioned, the students who originally believed that capital punishment is effective found the papers to that effect to be much more convincing, and were even strengthened in their belief. Meanwhile, the students who originally believed that capital punishment does not help found the papers to that effect much more convincing, and were likewise strengthened in their belief.

In the modern era of mass media and social media, we are bombarded with thousands of reports and articles and perspectives, and we choose which ones we rate as convincing. We might think that we are being objective, but it is very likely that we are simply succumbing to Confirmation Bias. We search for, interpret, favor, and recall information in a way that confirms or supports our preexisting beliefs.

Of course, not everyone is equally subject to Confirmation Bias. While we are all vulnerable to a certain degree, there are those who are less affected, and those who are more affected. How can you tell if you are particularly affected? I think that there is a pretty straightforward way to know, at least with regard to elections.

Elections are (frequently) binary choices between two people. However, each of those two people comes with a large number of associated people, and the two sides differ in a thousand ways, with a million different ramifications. And many of these ramifications can have long-term effects which cannot be predicted with any certainty, and may even have conflicting effects. To be sure, it is possible that one of these choices is overall vastly superior to the other. But with such a staggering array of factors, what are the odds that every single one of them fits neatly into the same binary column of what is good and bad?

So if you believe that every single article, every report, every argument, in favor of your preferred candidate is convincing, and every single article, report and argument in favor of the other guy is not at all convincing; if you believe that there is every reason to vote for your guy and not a single reason to vote for the other guy; if you believe that anyone who votes for the other guy is absolutely, irredeemably stupid or evil - then it is overwhelmingly likely that you suffer from severe Confirmation Bias.

(And there may turn out to be some further evidence to convince you. If you suffer from severe Confirmation Bias, you probably also believe that the evidence is overwhelmingly in favor of your candidate winning by a substantial majority. In the eventuality that your candidate fails to do so, you will have clear evidence that you indeed suffer from Confirmation Bias. And you can extrapolate from this that your deep certainty that the Other Guy is absolutely, utterly terrible is probably likewise not so well-founded.)

It's also helpful to realize that other people suffer from Confirmation Bias, too. The reason why they are voting in a way that seems entirely incomprehensible to you is therefore not that they are absolutely evil or utterly stupid, but rather that they had a certain inclination in a certain direction regarding certain differences of person or policy, and interpreted everything else in light of that.

We are all human, and we are thus all flawed. But at least we have the capacity to learn about our flaws, and to be aware of them. That is the first step to compensating for them.